Is Your Analytics Ready for an AI-First Product?

Product and engineering teams face new challenges when building AI-first products. A modern digital analytics platform offers solutions.

For a long time, software products have followed common conventions:

- Users navigated between content and tasks via menus

- Users viewed screens or pages to access pre-existing information

- Users clicked buttons to instruct the UI about their wishes

- Builders ran A/B tests to compare two versions of a UI

- Builders implemented basic user personalization, but were limited to a discrete number of experiences, or sorting order of products/content

During that period, day-to-day use of AI/ML applications was limited to use cases like search engine results, simple chatbot conversations, or “you might also like” product recommendations.

However, in the last three years, AI has rapidly increased in sophistication and accessibility. Suddenly, products have moved from deterministic to probabilistic. Nearly every digital product we use (for booking travel, shopping for shoes, preparing documents, and so on) is infused with AI, which changes the content we view and even the interface we interact with. Many apps now offer a chat-first interface where text commands replace pointing and clicking. Some even include agentic workflows that find information and take action, stringing together a series of tasks and communicating with third-party services.

The impact of this shift on modern product development is significant across many workstreams, including UX, design, engineering, and analytics. Users are less likely to follow a single “golden path” between discrete pages. Since they operate outside standard conversion funnels, it’s much harder for analytics teams to follow their specific journey.

In this post, I’ll share three key challenges faced by product and engineering teams building AI-first products, followed by the must-have solutions a modern digital analytics platform like Amplitude offers. We’ll also see examples of the new types of questions analytics teams need to be able to answer when building AI-first products.

Challenge 1: Outdated measurement tools

In most AI-based workflows, a successful outcome is hard to measure because it isn’t represented by a specific page view or button click. Teams can track correlated downstream metrics like revenue or retention, but traditional analytics tools can’t link movement on these metrics directly to new AI-based workflows.

Solutions:

- Evals as events and properties: Leading product teams use a combination of objective, deterministic tests and LLM-as-a-judge processes as “evals” for user journey success metrics. Amplitude events or event properties capture user behavior and allow for meaningful monitoring, investigation, and trend analysis. Amplitude’s NLP can categorize conversations into topics, use cases, or themes, then score the outcome as a success or failure. Teams can use Amplitude to answer a range of new questions that embrace more complex user journeys, like:

- What percent of conversations have a positive outcome?

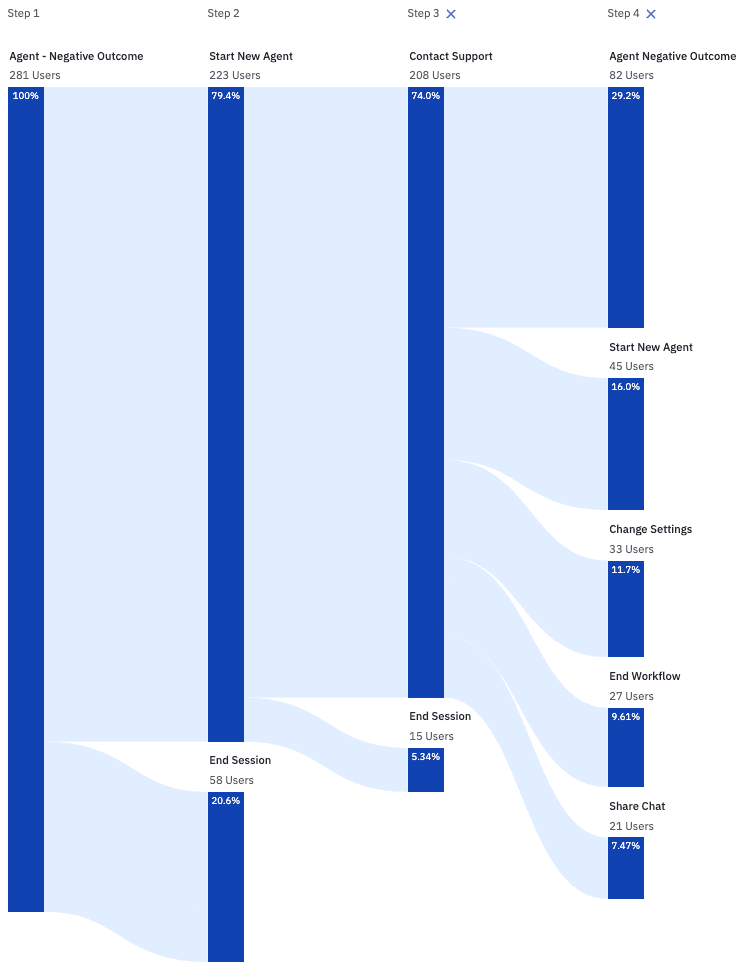

- What do users typically do after the agent fails?

- Which topics yield the most frustrated users?

- Do users sign up for our pro plan at a higher rate after a successful chat conversation?

- What should be the minimum level of confidence in a response to show it to the user?

- Qualitative review: There will always be times when product teams want to analyze user behavior in more detail than just “success” or “failure.” Amplitude natively offers several qualitative analysis tools to complement binary scoring:

- Session Replay: See reconstructions of full user sessions to understand their interaction with a bot or agent. We recommend using quantitative analysis to determine which sessions to watch, then observe replays to get all the details. Some teams even use AI to watch sessions and extract meaningful trends. Teams can use session replay to answer questions like:

- Why did a user exit a chat halfway through?

- How does a user act when the LLM takes too long to respond?

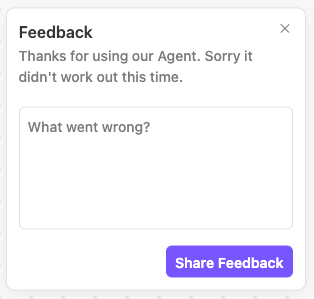

- Surveys: Rather than relying on what happens on the screen, perhaps the best indication of success or failure is the user’s subjective perception. Amplitude offers the ability to survey users on their experience. They can provide a binary pass/fail, rate on a 1-5 scale, or even type open-ended text feedback. NLP can then be used to categorize and analyze text feedback using Amplitude technology that minimizes hallucinations. Teams can now answer questions like:

- When do users score an outcome poorly despite the agent believing it delivered the right outcome?

- Which users expressed frustration with the agent and what did they do next?

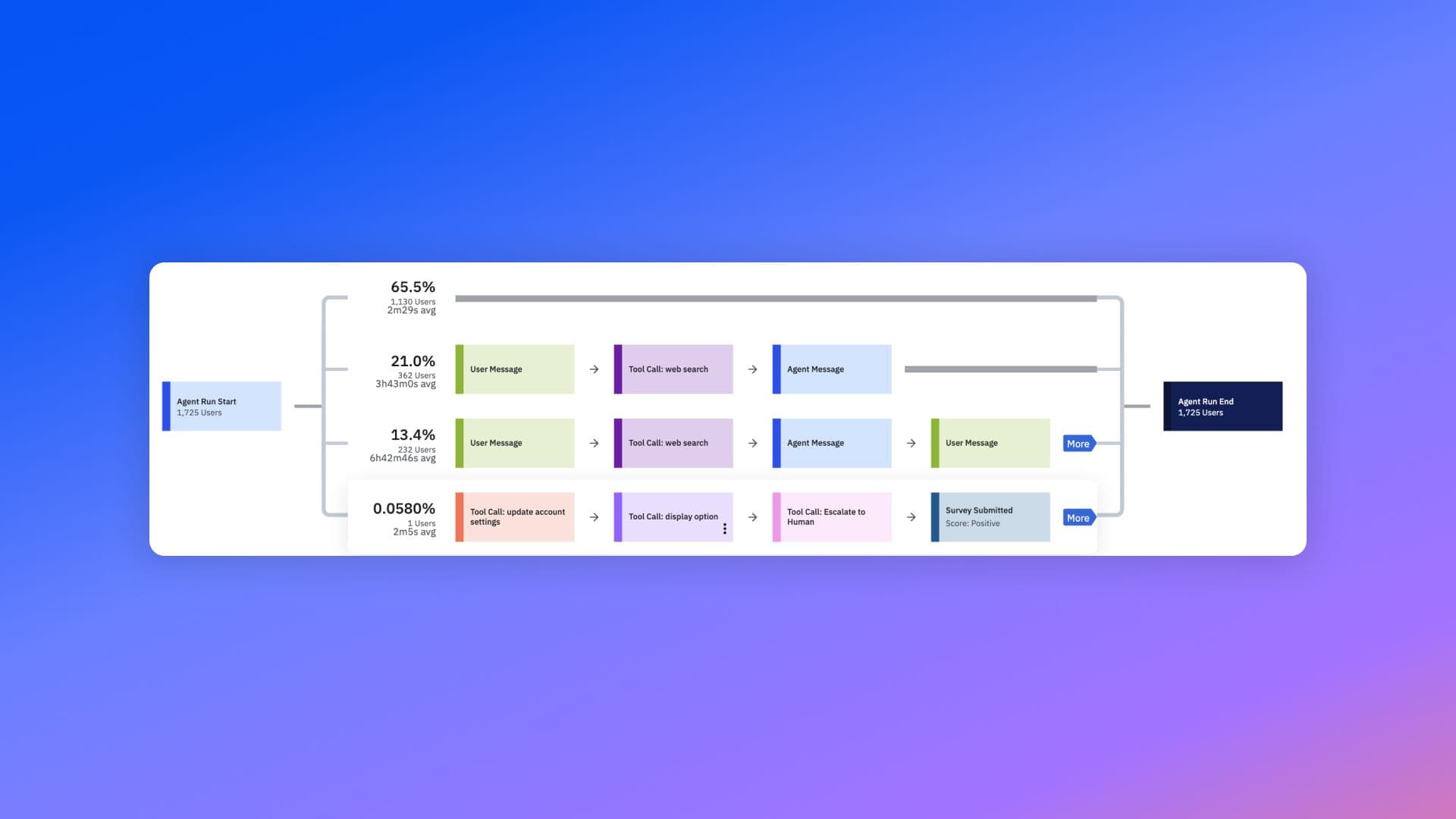

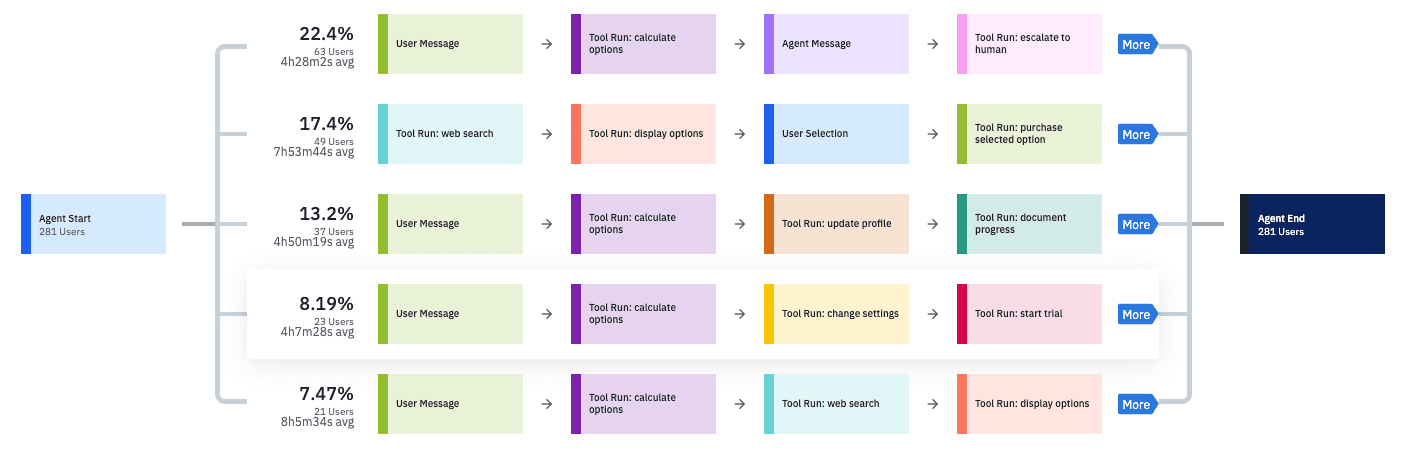

- Tool usage analytics: Amplitude can natively track tool usage within agentic workflows. This provides meaningful insight into which tools are used most, in which order, and what impact that has on the workflow outcome and user satisfaction.

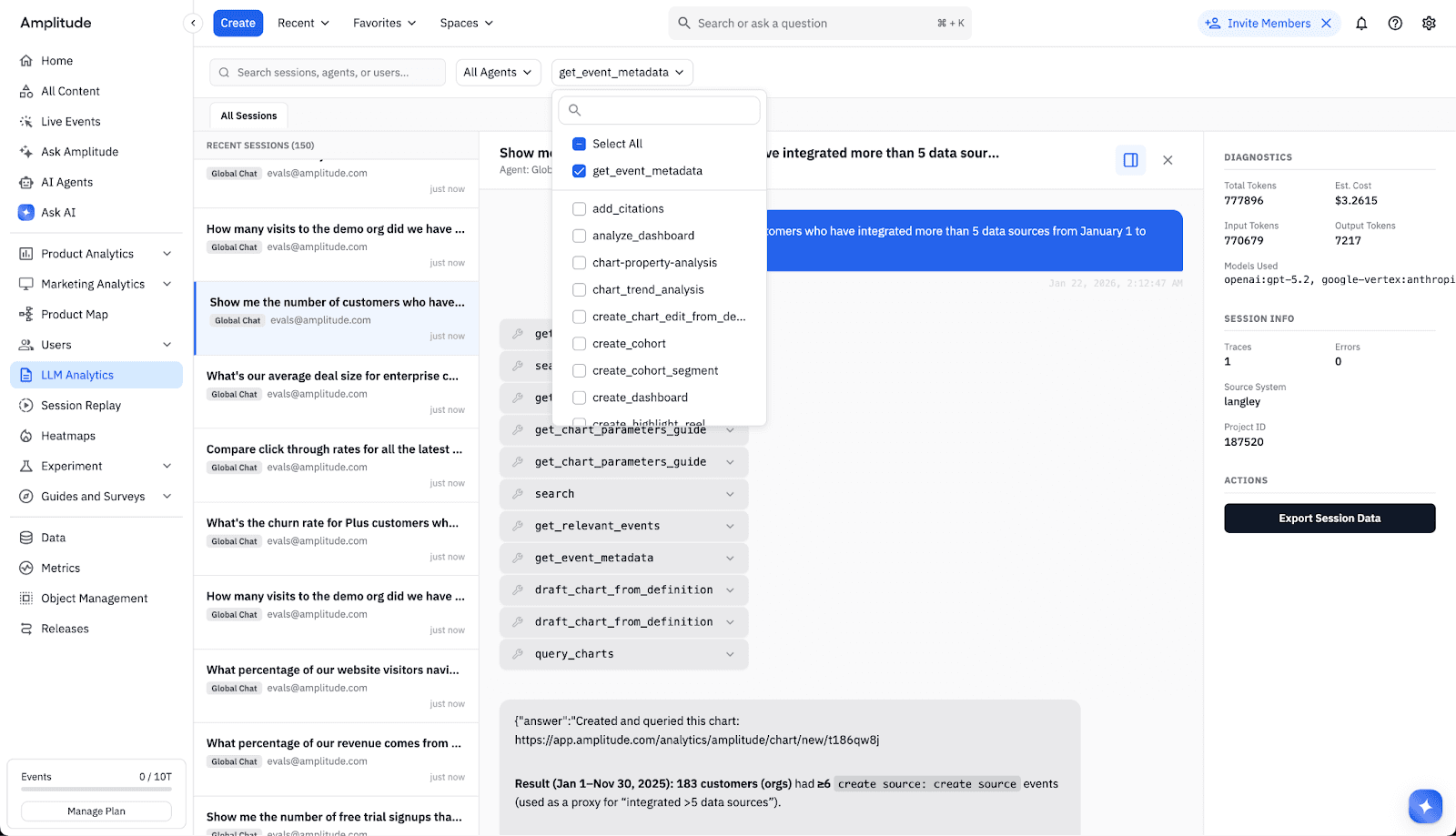

- Cost analytics: AI APIs also report token usage for each request. Amplitude offers prebuilt tooling to capture token usage per prompt and prebuilt reporting to monitor usage by feature or customer. Product teams can now optimize spend and ensure ROI on their AI vendor billing. Teams can now answer questions like:

- What is the average cost per agent run by use case?

- What is the cost impact of rolling out the latest LLM to our customer base?
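To make the "evals as events and properties" and cost analytics ideas above more concrete, here is a minimal Python sketch. It shapes one agent run as an event carrying eval and token properties, then computes success rate and average cost per use case. The event name `Agent Run Completed`, the property names, and the per-token prices are all hypothetical, chosen for illustration; they are not Amplitude's prebuilt schema or tooling.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; substitute your vendor's real rates.
PRICE_PER_1K = {"input": 0.5, "output": 1.5}


@dataclass
class AgentRun:
    user_id: str
    use_case: str        # e.g. a topic assigned by a classifier
    eval_passed: bool    # verdict from a deterministic test or an LLM judge
    input_tokens: int
    output_tokens: int


def run_cost(run: AgentRun) -> float:
    """Estimate the cost of one run in USD from its token counts."""
    return (run.input_tokens * PRICE_PER_1K["input"]
            + run.output_tokens * PRICE_PER_1K["output"]) / 1000


def to_event(run: AgentRun) -> dict:
    """Shape a run as an analytics event with eval and cost properties."""
    return {
        "user_id": run.user_id,
        "event_type": "Agent Run Completed",  # hypothetical event name
        "event_properties": {
            "use_case": run.use_case,
            "eval_outcome": "success" if run.eval_passed else "failure",
            "input_tokens": run.input_tokens,
            "output_tokens": run.output_tokens,
            "cost_usd": run_cost(run),
        },
    }


def summarize(events: list[dict]) -> dict:
    """Compute success rate and average cost per use case."""
    grouped = defaultdict(list)
    for event in events:
        props = event["event_properties"]
        grouped[props["use_case"]].append(props)
    return {
        use_case: {
            "success_rate": sum(p["eval_outcome"] == "success" for p in rows) / len(rows),
            "avg_cost_usd": sum(p["cost_usd"] for p in rows) / len(rows),
        }
        for use_case, rows in grouped.items()
    }


# Made-up sample runs, for illustration only.
runs = [
    AgentRun("u1", "billing", True, 1000, 200),
    AgentRun("u2", "billing", False, 3000, 600),
    AgentRun("u3", "returns", True, 2000, 400),
]
summary = summarize([to_event(r) for r in runs])
print(summary)
```

Storing the eval verdict and the cost on the same event is the design choice that matters here: questions like "what is the average cost per agent run by use case?" and "what percent of conversations have a positive outcome?" then become simple group-by queries over one event type.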

Challenge 2: LLMs are nondeterministic

LLMs are effectively black boxes: it’s impossible to open one up and see how it’s working. A nearly infinite combination of inputs (model selection, system prompts, chat messages, user context, tools available, model parameters) affects the quality of output, the cost, and the latency, yet the output isn’t predictable.

Solutions:

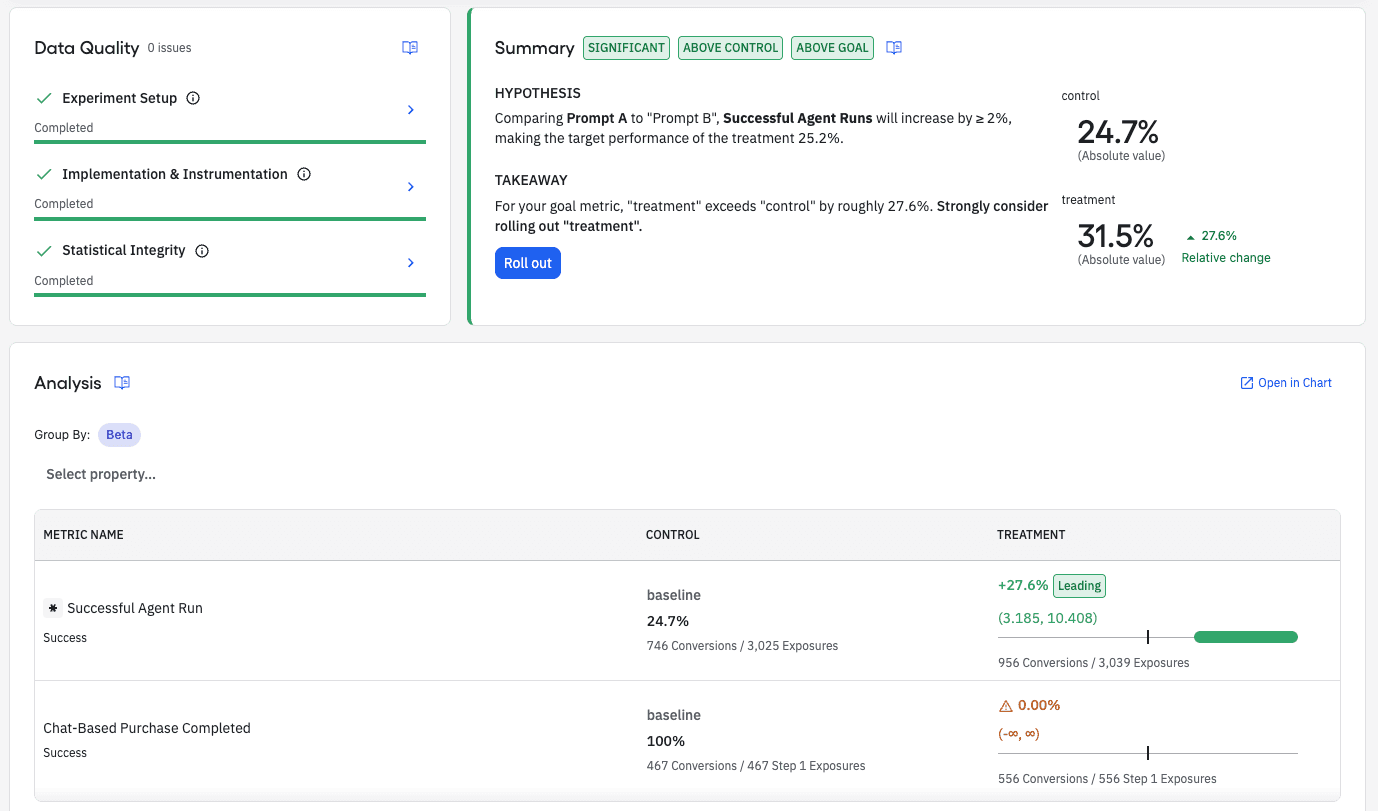

1. Experimentation: AI introduces a new universe of uncertainties and opportunities for optimization. Teams must choose between AI vendors, models, and system prompts, all of which can be controlled with feature flags and tested in experiments. There are also new pricing and packaging alternatives to test, such as how many free credits to offer or which plans can access certain models. With Amplitude Feature Experiments, engineering teams can replace hard-coded parameters with remotely configured payloads, then run experiments to determine the impact of changes. Teams can now answer questions like “Which system prompt variant leads to the highest agent satisfaction rate?” or “Does the delay from using a higher reasoning level produce more successful chats?”

2. User context inputs via profile API: LLMs have near-infinite general knowledge but no inherent contextual knowledge of a user’s state or goals. Imagine a support chatbot that doesn’t know what paid plan a user is on, or a flight-booking agent that doesn’t know a user’s loyalty program membership. Amplitude’s User Profile API unites user data from CRM, CDP, DWH, in-app behavioral data (including action recency and frequency), and even Amplitude-powered propensity models. This blended data can be delivered seamlessly to anywhere in your stack for entry into a system prompt with minimal engineering lift.

3. User profile enrichment: Sophisticated product and data teams are also keen to use AI for more advanced use cases in the data warehouse. However, there are still challenges in collecting clean, reliable, up-to-date data to train these models. It’s also necessary to have tools in place to act on the model outputs. Amplitude’s two-way integrations with leading warehousing providers (Snowflake, BigQuery, S3, and Databricks) can facilitate this. The output of an ML job run in the data warehouse can be imported back to Amplitude to enrich the user’s profile. Once back in Amplitude, that property can be used to target experiments, guides, and surveys. It can also be synced out to ad platforms, marketing automation and messaging tools, CRMs, and more for activation.
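The first two solutions above can be sketched together: replace hard-coded model settings with a per-variant payload, then add user context to the system prompt. The Python below is a generic illustration, not Amplitude's SDK or User Profile API. The variant payloads, the experiment key `support-prompt-test`, the model names, and the profile fields are all hypothetical, and the hash-based bucketing is a stand-in for whatever assignment a real experimentation service performs.

```python
import hashlib

# Hypothetical variant payloads; in practice these would be fetched from a
# remote flag or experiment service rather than defined in code.
VARIANTS = {
    "control": {
        "model": "model-a",
        "system_prompt": "You are a concise support assistant.",
    },
    "treatment": {
        "model": "model-b",
        "system_prompt": "You are a friendly, detailed support assistant.",
    },
}


def assign_variant(user_id: str, experiment_key: str) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return "treatment" if bucket < 50 else "control"


def build_system_prompt(base_prompt: str, profile: dict) -> str:
    """Append known user context to the variant's base system prompt."""
    context_lines = [f"- {key}: {value}" for key, value in sorted(profile.items())]
    if not context_lines:
        return base_prompt
    return base_prompt + "\n\nKnown user context:\n" + "\n".join(context_lines)


def prepare_request(user_id: str, profile: dict) -> dict:
    """Combine the assigned variant's payload with the user's context."""
    variant = assign_variant(user_id, "support-prompt-test")
    payload = VARIANTS[variant]
    return {
        "variant": variant,
        "model": payload["model"],
        "system_prompt": build_system_prompt(payload["system_prompt"], profile),
    }


# Hypothetical profile fields, standing in for data from a profile lookup.
profile = {"plan": "pro", "loyalty_tier": "gold"}
request = prepare_request("user-123", profile)
print(request["variant"])
print(request["system_prompt"])
```

Returning the variant name alongside the request makes it easy to attach it to downstream events, so chat outcomes can later be compared by variant.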

Challenge 3: Dependence on external systems

Product builders are now relying on external parties for critical user experiences. Engineering teams will always lose sleep over putting third-party API calls in the critical path of delivering an experience to a user, but for AI-powered features, there’s often no alternative.

Solutions:

- Latency monitoring: Amplitude automatically tracks the latency between a user prompt and an AI response. This opens up opportunities to understand which segments of users or types of prompts are producing the biggest delays. Product teams can set up intelligent alerts to fire if SLAs are breached. These metrics can also inform experiments about model selection, AI vendor performance, or other changes to backend infrastructure.

- Feature flags: If the worst should happen and a team notices a latency spike or an API endpoint becomes unresponsive, feature flags are critical as circuit breakers, enabling an engineering team to quickly swap to a backup infrastructure or vendor.
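As a rough sketch of the circuit-breaker idea above, the Python below routes a prompt to a backup vendor when a flag is switched on or when the primary call fails, and records the latency of whichever call was made. The `use_backup` flag value and the vendor functions are hypothetical stand-ins; a real setup would read the flag from a feature-flag service and call actual vendor clients.

```python
import time
from typing import Callable


def call_with_fallback(
    prompt: str,
    primary: Callable[[str], str],
    backup: Callable[[str], str],
    use_backup: bool,
) -> dict:
    """Route to the backup vendor when the flag is on or the primary fails."""
    started = time.perf_counter()
    if use_backup:
        vendor, response = "backup", backup(prompt)
    else:
        try:
            vendor, response = "primary", primary(prompt)
        except Exception:
            # Primary is unavailable: fail over rather than surface an error.
            vendor, response = "backup", backup(prompt)
    latency_ms = (time.perf_counter() - started) * 1000
    return {"vendor": vendor, "response": response, "latency_ms": latency_ms}


# Stand-in vendor clients, for illustration only.
def healthy_primary(prompt: str) -> str:
    return f"primary answer to: {prompt}"


def failing_primary(prompt: str) -> str:
    raise TimeoutError("primary vendor did not respond")


def backup_vendor(prompt: str) -> str:
    return f"backup answer to: {prompt}"


normal = call_with_fallback("hello", healthy_primary, backup_vendor, use_backup=False)
failed_over = call_with_fallback("hello", failing_primary, backup_vendor, use_backup=False)
flagged = call_with_fallback("hello", healthy_primary, backup_vendor, use_backup=True)
print(normal["vendor"], failed_over["vendor"], flagged["vendor"])
```

The returned `vendor` and `latency_ms` values are the kind of fields a team might attach to an analytics event, so that latency can be segmented by vendor or prompt type.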

Taken together, these solutions give product teams the ability to drive improvements to their AI-powered features and apps with full visibility into the impact on responsiveness, response quality, and cost.

With the proliferation of AI, modern product teams need to blend qualitative and quantitative user experience data for a deeper understanding of their customers. They need to experiment on parameters, models, and infrastructure to react to users in real time. They need to collect user context and deliver it anywhere in the stack to create the most personalized experience yet.

Teams that can ship code and learn using tight feedback loops will innovate faster and outperform their competition. Amplitude’s digital analytics platform is uniquely positioned to help teams build this new generation of AI-native products and experiences.

Ken Kutyn

Senior Solutions Consultant, Amplitude

Ken has 8 years of experience in the analytics, experimentation, and personalization space. Originally from Vancouver, Canada, he has lived and worked in London, Amsterdam, and San Francisco and is now based in Singapore. Ken has a passion for experimentation and data-driven decision-making and has spoken at several product development conferences. In his free time, he likes to travel around Southeast Asia with his family, bake bread, and explore the Singapore food scene.
